Merges individual spectral channels of a multispectral LiDAR into a multispectral 3D point cloud.

Multispectral and hyperspectral LiDAR (MSL/HSL) systems are a breakthrough in laser scanning. MSL and HSL technologies make it possible to acquire 3D point cloud data in more than one spectral channel, so that an n-dimensional point cloud (the additional dimensions being radiometric features) can be obtained. In multispectral LiDAR systems, the number of points recorded in each channel can differ, depending on how land objects interact with the different wavelengths. Hence, removing duplicate points (i.e., points with the same coordinates) and then merging the spectral information are essential. In addition, combining the point clouds of all channels minimizes the amount of missing data. After merging the point clouds of MSL data, a comprehensive multispectral point cloud is obtained in which each point carries the intensity information of all channels in addition to its coordinates. For more information on multispectral LiDAR, please refer to the related literature.

To merge the spectral information of all channels and create MSL data, a nearest neighbor search in 3D space is applied in each channel to estimate the intensity values at the other wavelengths. Generating a multispectral point cloud from several monochromatic ones is divided into two phases. The following sections briefly describe the necessary processing steps.

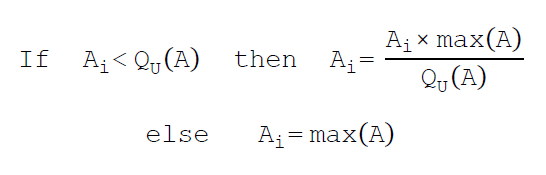

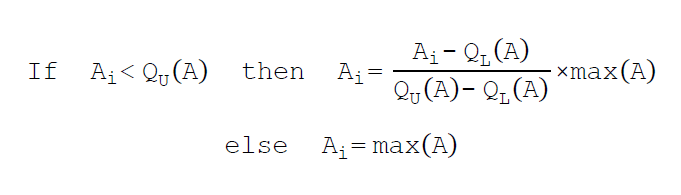

The first phase consists of importing all monochromatic point clouds into separate ODMs. It is recommended to stretch the intensity values to improve radiometric contrast. Intensity stretching can use either the upper quantile alone or both the lower and upper quantiles, as shown in Figure 1 and Figure 2, respectively. In these formulas, Ai is the amplitude value of a point, and QL(A) and QU(A) denote the lower and upper quantiles. The default values for QL(A) and QU(A) are 0.01 and 0.99, respectively.
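The exact stretching formulas are given in Figure 1 and Figure 2 and are not repeated in the text. As a minimal sketch, assuming the common linear form (division by the upper quantile, or min-max scaling between the lower and upper quantile), the idea can be illustrated in plain Python. The function names, the clipping to [0, 1], and the quantile interpolation are assumptions made for illustration; they are not part of OPALS.

```python
def quantile(values, q):
    """Quantile of a non-empty sequence by linear interpolation, q in [0, 1]."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def stretch_upper(amplitudes, q_upper=0.99):
    """Assumed upper-quantile stretch: A_i / Q_U(A), clipped at 1."""
    qu = quantile(amplitudes, q_upper)
    if qu <= 0:
        raise ValueError("upper quantile must be positive")
    return [min(a / qu, 1.0) for a in amplitudes]


def stretch_lower_upper(amplitudes, q_lower=0.01, q_upper=0.99):
    """Assumed lower/upper stretch: (A_i - Q_L(A)) / (Q_U(A) - Q_L(A)), clipped to [0, 1]."""
    ql = quantile(amplitudes, q_lower)
    qu = quantile(amplitudes, q_upper)
    if qu <= ql:
        raise ValueError("upper quantile must exceed lower quantile")
    return [min(max((a - ql) / (qu - ql), 0.0), 1.0) for a in amplitudes]


if __name__ == "__main__":
    # Hypothetical amplitude values of one channel
    amplitudes = [float(i) for i in range(101)]
    print(stretch_lower_upper(amplitudes)[:3])
    print(stretch_upper(amplitudes)[-3:])
```

With the default quantiles of 0.01 and 0.99, the lowest and highest one percent of amplitudes saturate at the ends of the output range, which is what increases the contrast of the remaining values.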

The second phase merges the point clouds of the spectral channels, which may have been stretched previously. First, a single ODM file is created by importing all ODM point clouds corresponding to the laser channels. This file contains all non-redundant points from the monochromatic point clouds, each with a single intensity value. The spectral merging then iterates through all points that have only a single intensity value and searches for the nearest corresponding points in the other channels: the missing intensity values of each point in the initial multispectral point cloud are estimated from the intensity values of its nearest neighbors in the other channels. This can be computed by calling the AddInfo module multiple times and performing the spatial queries by defining two filters:

Finally, the multispectral point cloud is computed.
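The logic of the second phase (collect all unique points, then fill the missing intensities from the nearest neighbor in each other channel) can be sketched conceptually in plain Python. This is not how the OPALS module is implemented, which relies on ODM files and AddInfo spatial queries. The function `merge_channels`, the tuple layout, and the brute-force search are hypothetical and chosen only for readability; a real data set would need a spatial index.

```python
import math


def merge_channels(channels):
    """Merge monochromatic point clouds into one multispectral point cloud.

    channels: list of point lists, one per laser channel;
              each point is a tuple (x, y, z, intensity).
    Returns a list of (x, y, z, [intensity_ch0, intensity_ch1, ...]).
    """
    n = len(channels)

    # Step 1: collect all points; identical coordinates collapse into one record.
    merged = {}
    for c, points in enumerate(channels):
        for x, y, z, intensity in points:
            record = merged.setdefault((x, y, z), [None] * n)
            record[c] = intensity

    # Step 2: fill each missing intensity from the nearest point of that channel.
    for xyz, intensities in merged.items():
        for c, points in enumerate(channels):
            if intensities[c] is None and points:
                nearest = min(points, key=lambda p: math.dist(xyz, p[:3]))
                intensities[c] = nearest[3]

    return [(x, y, z, vals) for (x, y, z), vals in merged.items()]


if __name__ == "__main__":
    # Two hypothetical channels with one shared coordinate
    ch1 = [(0.0, 0.0, 0.0, 10.0), (1.0, 0.0, 0.0, 20.0)]
    ch2 = [(0.0, 0.0, 0.0, 5.0), (0.9, 0.0, 0.0, 7.0)]
    for point in merge_channels([ch1, ch2]):
        print(point)
```

The sketch always accepts the nearest neighbor regardless of distance. Whether a maximum search radius should limit the query is a design decision that the text above does not specify.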

| possible input | evaluates to |
|---|---|
| 1, true, yes, Boolean(True), True | Boolean(True) |
| 0, false, no, Boolean(False), False | Boolean(False) |


To run the following example, start an opalsShell and change to the $OPALS_ROOT/demo//multispectral directory.

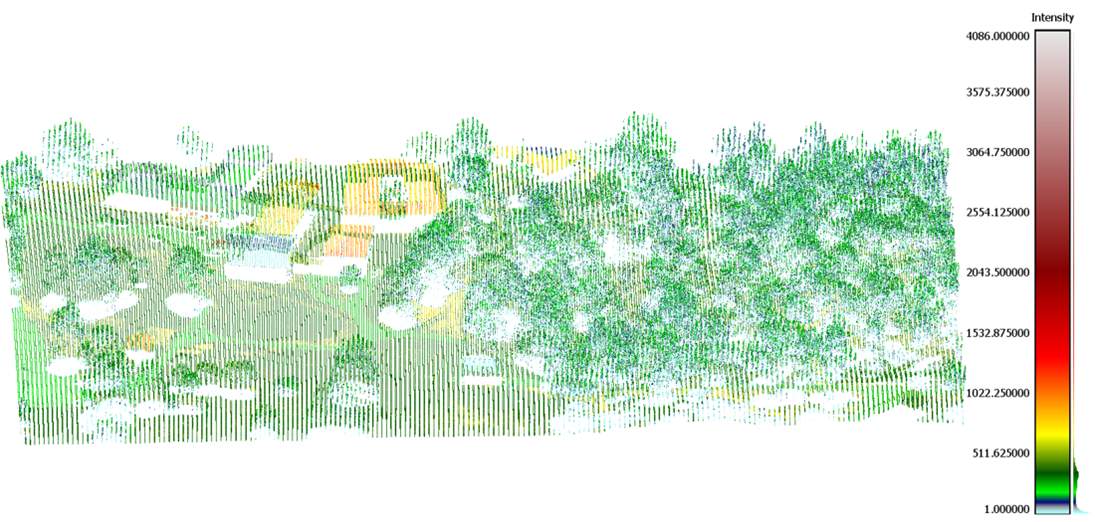

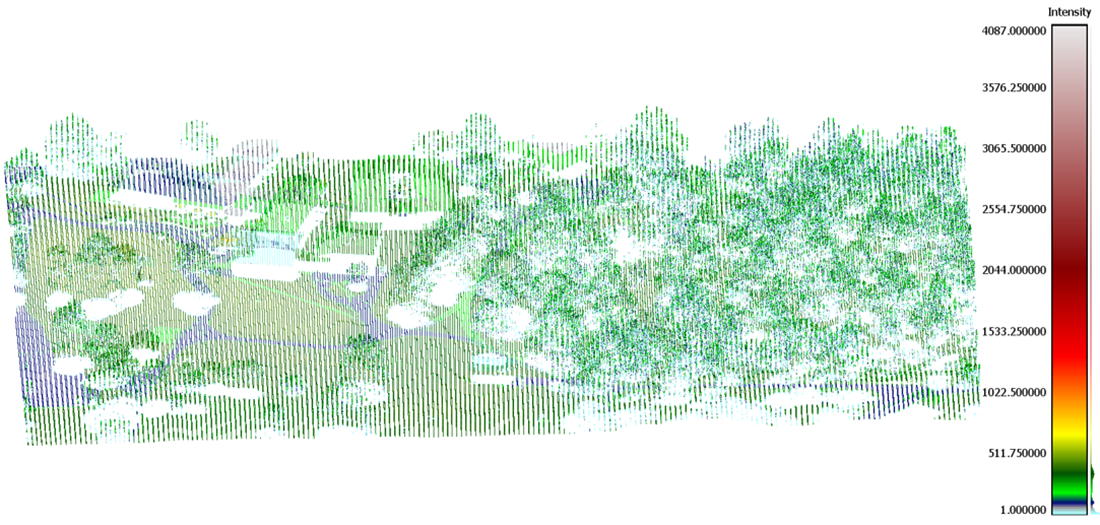

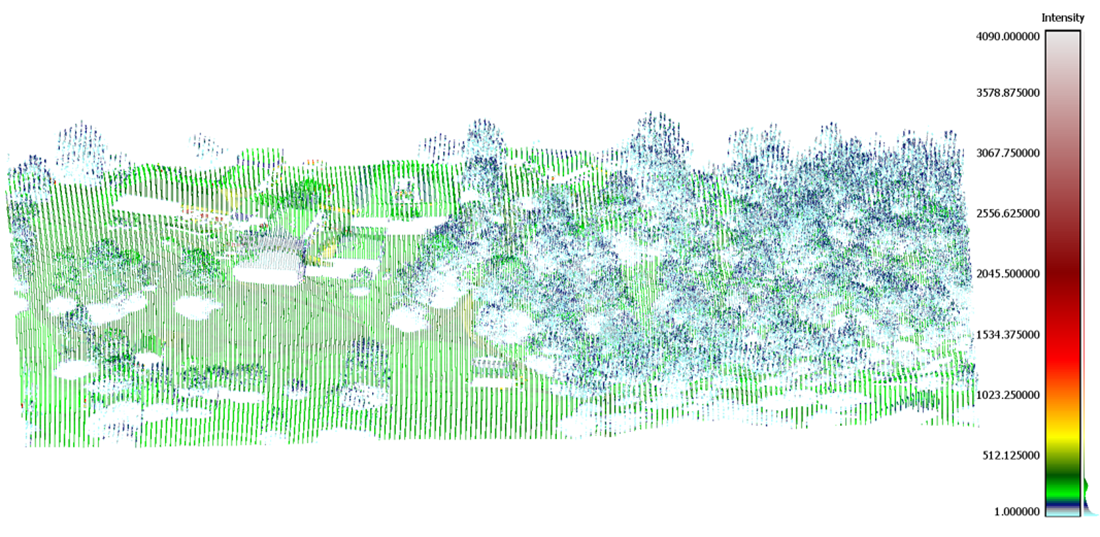

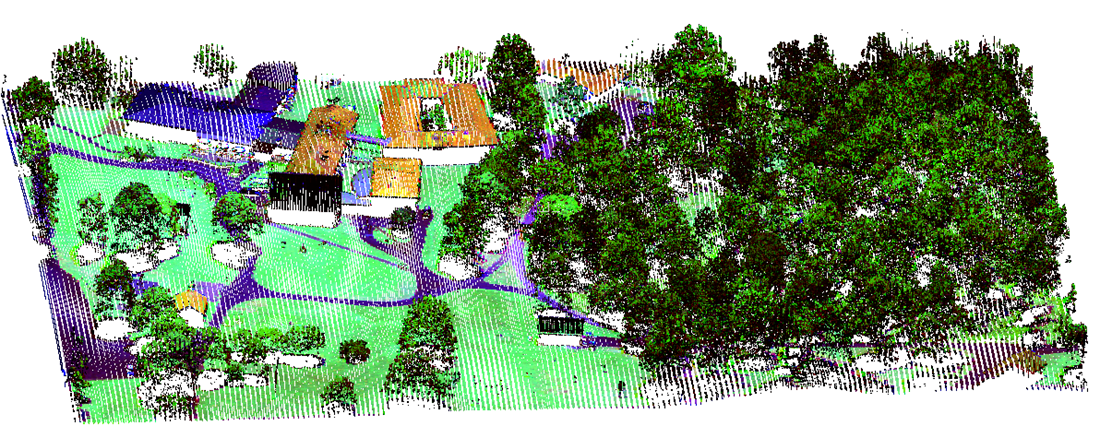

The MSL data used in this example were acquired with a Teledyne Optech Titan sensor. The data comprise three point clouds, one for each laser channel, operating at 1550 nm (channel 1 = SWIR), 1064 nm (channel 2 = NIR), and 532 nm (channel 3 = green). For each point, only a single intensity (amplitude) value is measured; the radiometric information of the two remaining channels can be obtained by merging the spectral information and generating the multispectral point cloud. The study area is located in Sweden (Spatial Reference System: SWEREF99 TM, EPSG: 3006), as shown in Figures 3-5. The files can be found in the $OPALS_ROOT/demo//multispectral directory.

To generate the merged multispectral point cloud, run the following command:

The resulting point cloud, visualised with RGB colors, is shown below.

Takhtkeshha, N., Mandlburger, G., Remondino, F., & Hyyppä, J. (2024). Multispectral LiDAR technology and applications: a review. Sensors, 24(5), 1669. https://doi.org/10.3390/s24051669

Takhtkeshha, N., Mandlburger, G., Remondino, F., & Hyyppä, J. (2024). A review of multispectral LiDAR data processing.